of Pointclouds and Spatial Tracking

- martin92147

- Apr 11, 2019

- 4 min read

Lately I've been toying with various ways to rebuild a real environment in a virtual space, and after reading about someone's exploration with some open source tools I decided to feed VisualSFM some images and see what came out. SFM, or Structure From Motion, is a photogrammetry technique that takes pictures from a moving camera, i.e. two or more pictures of the same thing from different positions, and infers where things are in relation to it. It's similar to the reason you have two eyes, except with SFM you can have n eyes.

I had plenty of footage from my drone using a keychain camera, in this case the Moebius v1:

Here are some frames from a 4-minute acrobatic flight in a park:

At first I got very little out of it, as the super wide lens, ridiculous camera angles, low quality CMOS and compression were distorting just about everything good about the image. So I decided to up the ante: I cropped the footage to a square to discard the bulk of the distortion, ran a sharpening filter in Lightroom, corrected the barrel distortion, then wrote out every 5th frame or so and manually deleted anything that looked too motion-blurred.
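I did the frame culling by hand, but the "every 5th frame, minus the blurry ones" step could be roughed out in code. The sketch below is an assumption on my part rather than what I actually ran: it scores sharpness with the variance of a simple Laplacian (a common blur heuristic), and `select_frames`, `step` and `min_sharpness` are hypothetical names and values you'd need to tune for your own footage.

```python
def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over a 2D list of grayscale values.

    Sharp frames have strong edges, so the Laplacian response varies a lot;
    motion-blurred frames give a low variance.
    """
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def select_frames(frames, step=5, min_sharpness=50.0):
    """Return indices of every `step`-th frame that passes the sharpness test.

    `frames` is a list of 2D grayscale arrays (lists of rows).
    `min_sharpness` is a made-up threshold, not a recommended value.
    """
    kept = []
    for index in range(0, len(frames), step):
        if laplacian_variance(frames[index]) >= min_sharpness:
            kept.append(index)
    return kept
```

In practice you'd load the frames with an image library and still eyeball the result, since a threshold like this will happily keep a sharp frame of nothing useful.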

Where running it on 100 frames meant that every image had to be compared with 99 others, now every image had to be compared to 1788! It took two days to do feature detection, even with CUDA on a GTX980. But after all this I ran the bundle adjustment / 3D reconstruction and magic started to appear:

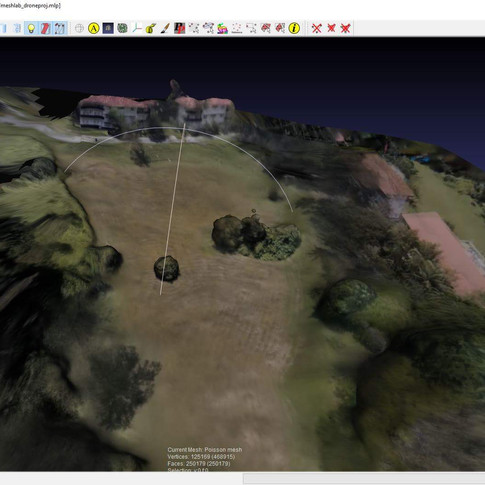

It was *actually* able to see where the drone was while filming. You can see the loops and spins appear as it loaded and corrected each camera! Awesome! So let's mesh the pointcloud in MeshLab with Poisson reconstruction:

OK, mind blown. It also appears that textures bring a huge level of believability to what's otherwise a blob, who woulda thought? It even has the right colour tone for the appropriate piece of grass....

At this point, and with a few more tests, there are a few ingredients that have proven to be successful in the process:

- If you have a wide FOV, undistort it manually and crop it in to remove extreme distortion. SFM accounts for some lens distortion, but not all.

- EXIF data is preferable. The step above might hinder the process, so if you have a clean image with EXIF data, don't process it.

- Changes in scale and location help, but having images that run in a sequence (spatially) works very well.

- Sequential feature matching: instead of comparing an image to n images, if you have a solid sequence you can just compare it to the ones in front and behind, saving time.

- Heavily textured surfaces work best. Featureless walls are terrible. That's why Facebook's SLAM tracking demos are of a wooden table.

- SFM can exclude moving objects, but it's probably wise to avoid them altogether.
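To put rough numbers on the sequential matching point, here's a small counting sketch. It isn't how VisualSFM schedules its matches internally; it just counts image pairs under two assumptions of mine: exhaustive matching compares every unordered pair once, and sequential matching compares each frame only to a hypothetical `window` of frames that follow it.

```python
def exhaustive_pairs(n_images):
    """Number of unordered image pairs when every image is matched to every other."""
    return n_images * (n_images - 1) // 2


def sequential_pairs(n_images, window=1):
    """Number of pairs when each image is only matched to the next `window` images.

    Matching "in front and behind" with window=1 is covered here, because the
    pair (i, i+1) is the same pair whether you look forward from i or back from i+1.
    """
    total = 0
    for index in range(n_images):
        remaining = n_images - 1 - index  # frames left after this one
        total += min(window, remaining)
    return total
```

For the 1788 frames above, `exhaustive_pairs(1788)` gives 1,597,578 pairs, while `sequential_pairs(1788, window=1)` gives 1787. Widening the window a little trades some of that saving for a better chance of linking frames where the drone loops back on itself.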

And with that in mind, I set about finding more things to scan... Starting of course with my living room:

Turns out three.js becomes particularly handy here, as it's easy to put online and show people from my phone. Here's the living room as a WebGL render; you can drive the camera around by clicking and dragging. Apologies for the average iframe embed; if it doesn't work you can see the result here.

And here's how it is to step into the VR space while simultaneously in the Real space (and wearing a headset). Trippy:

If you think that's weird, try orienting and scaling the room incrementally to match how you think it should be in reality. Mixed reality exploration at its best!

So after playing with SFM for a while, I decided to see what else could do the same thing, seeing as inside-out tracking is a key component of what Augmented Reality will need to be functional. Meanwhile, a workmate announced he'd built a lidar scanner. It's running on an Arduino and outputs point data via the serial interface/dialogue as a CSV. So far there's a stepper motor on the horizontal pan, and soon there will be one replacing the servo for tilt. Looking forward to increased accuracy and resolution!
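Turning pan/tilt/distance readings into a pointcloud is mostly trigonometry. Here's a minimal sketch, with the loud caveat that I'm assuming the CSV layout: each row is taken to be `pan_deg,tilt_deg,distance`, which may well not match what the scanner really sends. The function names and axis convention (z up, pan measured around z) are also my own choices.

```python
import csv
import io
import math


def polar_to_xyz(pan_deg, tilt_deg, distance):
    """Convert one pan/tilt/range reading to Cartesian x, y, z (z is up)."""
    pan = math.radians(pan_deg)
    tilt = math.radians(tilt_deg)
    horizontal = distance * math.cos(tilt)  # range projected onto the floor plane
    x = horizontal * math.cos(pan)
    y = horizontal * math.sin(pan)
    z = distance * math.sin(tilt)
    return (x, y, z)


def parse_scan(text):
    """Parse CSV text of assumed `pan_deg,tilt_deg,distance` rows into points.

    Rows that don't have three numeric fields are skipped, since serial
    output tends to include the odd partial or garbled line.
    """
    points = []
    for row in csv.reader(io.StringIO(text)):
        if len(row) != 3:
            continue
        try:
            pan_deg, tilt_deg, distance = (float(value) for value in row)
        except ValueError:
            continue
        points.append(polar_to_xyz(pan_deg, tilt_deg, distance))
    return points
```

The resulting list of tuples can be written out one point per line as an `.xyz` text file, which is a simple way to get points into a meshing tool.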

So the glowing dots are the lidar points, and I used MeshLab to do another Poisson surface reconstruction, which gave relatively uniform, if low res, surfaces. Much better than the blobby/wavy ones from before, but with no texture. So I just glued the SFM result to the lidar, and it seems to work pretty well.

We also tried out a Google Tango device, with underwhelming results:

As you might be able to see, the tracking drifts as it doesn't do much of an 'absolute' lock to features. Instead, error compounds, as it appears to focus on the relative change in position from the last grabbed features.
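To show why relative-only tracking drifts, here's a toy 1D simulation. It says nothing about how Tango works internally; it's a sketch of the general idea under my own assumptions: each step estimate carries a little Gaussian noise, and the two hypothetical functions compare summing noisy steps against measuring position directly each time.

```python
import random


def dead_reckoning_errors(true_steps, noise, rng):
    """Track position by summing noisy step estimates; return error after each step.

    Every step's noise is added to the running estimate and never removed,
    so the error behaves like a random walk and tends to grow over time.
    """
    truth = 0.0
    estimate = 0.0
    errors = []
    for step in true_steps:
        truth += step
        estimate += step + rng.gauss(0.0, noise)
        errors.append(abs(estimate - truth))
    return errors


def absolute_fix_errors(true_steps, noise, rng):
    """Measure position directly (with noise) at every step; return the errors.

    Each measurement is independent, so the error stays around `noise`
    no matter how long the track is.
    """
    truth = 0.0
    errors = []
    for step in true_steps:
        truth += step
        estimate = truth + rng.gauss(0.0, noise)
        errors.append(abs(estimate - truth))
    return errors
```

Run both over a few hundred steps with something like `random.Random(0)` and the same `noise`, and the dead-reckoned error wanders off while the absolute one stays put, which is roughly the behaviour we saw.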

Getting back to the LIDAR/SFM combo, the neat thing about this is that I can put a 3D camera in the virtual scene, and the real-world features should match up if the lens settings are close to that of a projector, bingo:

Now, to shatter the wall with a Voronoi pattern, or model some sort of fungal vines, maybe set it on fire or dribble water down it, or maybe just a non-divergent vector field flowing around features? Really, the question is "how hard will it be to paint".
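One way to get the virtual camera's lens "close to that of a projector" is to work from the projector's throw ratio (throw distance divided by image width). The sketch below is an assumption-laden starting point, not the settings I used: it treats the projector as an ideal pinhole with no lens shift or keystone, and the function names are made up for illustration.

```python
import math


def horizontal_fov_deg(throw_ratio):
    """Horizontal field of view for a given throw ratio (distance / image width).

    At distance d the image is d / throw_ratio wide, so half the width
    subtends an angle of atan(0.5 / throw_ratio).
    """
    return math.degrees(2.0 * math.atan(0.5 / throw_ratio))


def vertical_fov_deg(throw_ratio, aspect):
    """Vertical field of view, given the image aspect ratio (width / height)."""
    return math.degrees(2.0 * math.atan(0.5 / (throw_ratio * aspect)))
```

Check which of the two your 3D package expects; three.js's `PerspectiveCamera`, for example, takes the vertical FOV in degrees. Expect to nudge the numbers by eye afterwards, since real projectors rarely sit perfectly on axis.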

Looking forward to exploring more ways of screwing around with this geometry, and if we ever put paint to wall, I'll post the results here.
